The Stateless Ethereum Book

What is Stateless Ethereum?

Stateless Ethereum is an update to the Ethereum protocol, in which blocks become self-contained units of execution. There is no longer a need to download the entire state of Ethereum, as all the required information is packaged inside the block.

Why is statelessness important?

Stateless Ethereum brings forth many scalability and usability features that the Ethereum community has been anticipating for a long time.

In practical terms, this means:

- Reduced validator hardware requirements: IO, disk space, and computation.

- As a result, a higher gas limit, since the lower gas limit was imposed by these hardware requirements.

- Faster sync times, as a node doesn’t need more than an EL block to join the network.

- Easy implementation of state expiry, a feature that has eluded Ethereum since before 2018.

- Trustless light clients that directly follow the chain without needing a third party to provide the state.

- Better decentralization, as it makes it possible to cheaply create private pools.

Main benefits

Statelessness in Ethereum brings significant benefits by addressing critical scalability and decentralization challenges.

Scalability

By removing the need for clients to store a large amount of data, validators can process more transactions per block, increasing throughput. Thus, we enable:

- Higher TPS, since the IO bottleneck is the principal hindrance to increasing the gas limit.

- No required synchronization, since all the data needed to execute a block is packaged with it.

- Reduced disk footprint, for non-block builders, validators, wallets, and simple nodes. In the case of verkle trees, block builders will also benefit from a reduced disk footprint.

Decentralization

By reducing the hardware requirements for validating nodes, it becomes feasible for more participants to run nodes on lightweight devices. This fosters decentralization by:

- Lowering entry barriers, where new roles with reduced hardware and monetary investments are created, allowing new actors to help secure some aspects of the network (see rainbow staking).

- Enabling users to create private staking pools, where hardware and monetary resources can be pooled collaboratively to participate in the network.

- Reducing the trust placed in centralized data providers, removing the need for lightweight clients to trust a centralized entity.

Innovative features

Statelessness also opens the door to innovative features, including:

- State expiry, which limits the growth of historical state data.

- Rainbow staking, which enhances flexibility in staking mechanisms by creating many niches for low-stake nodes to participate in the network’s security.

- Secure light clients, which is the consequence of not having to trust a centralized authority when using the blockchain.

Ease of use

Additionally, by reducing the state proof size, statelessness facilitates more seamless cross-chain communication, laying the groundwork for improved interoperability between the Ethereum L1 and its L2s.

Purpose of this book

This book is designed to serve as a comprehensive resource for understanding and contributing to our work on Stateless Ethereum.

Goals of This Book

- Explain the vision: Provide an in-depth explanation of the motivation behind Stateless Ethereum, including its potential impact on scalability and decentralization.

- Technical guidance: Offer clear and detailed instructions for developers, researchers, and contributors to engage with and extend our work.

- Knowledge sharing: Educate readers about various aspects of stateless block execution and their role in achieving Stateless Ethereum.

- Encourage collaboration: Foster a community of like-minded individuals by providing resources, tools, and best practices for collaborative development.

Who is this book for?

This book is intended for:

- Developers: Interested in contributing to the implementation of Stateless Ethereum.

- Researchers: Exploring the new designs enabled by Stateless Ethereum and learning how client architecture is impacted by these choices.

- Learners: Seeking to deepen their understanding of this major evolution of the Ethereum protocol.

Trees

Overview

Changing the state tree(s) is one of the core protocol changes we must make to achieve a stateless Ethereum future. The main goal is to design a new tree for more efficient state proofs.

Current tree

The current data structure used to store Ethereum state is a Merkle Patricia Trie (MPT). This article explains more about this tree.

Relevant design aspects

Let’s explore the most important angles on a new tree design, addressed in Verkle Trees and Binary Tree proposals.

Arity

The current MPT tree has an arity of 16. The original goal for this decision was to reduce disk lookups, as it means the tree becomes shallower compared to a lower-arity tree. The downside is that state proofs are much larger. For more details, read the following rationale section in the Binary Tree EIP.

Merkelization cryptographic hash function

The cryptographic hash function used for tree merkelization can significantly impact state-proof verification. Verifying state proofs involves calculating hashes in a specific way to compare against the expected tree root.

There are two performance aspects:

- Out of circuit: How fast it is to calculate the hash function result on a regular CPU.

- In circuit: How fast this can be calculated within a SNARK circuit under a particular proving system.

Both types of performance are relevant since the protocol requires calculating hash functions for different tasks both out and in circuits. The current tree uses keccak, which has good out-of-circuit performance but is challenging to perform efficiently on most bleeding-edge proving systems.

Account’s code

The current MPT doesn’t store the account’s code bytecode directly in the tree; it only stores a commitment (i.e., the code-hash as keccak(code_bytecode)).

While this decision helps avoid potentially bloating the state tree with all the accounts’ code, it has an unfortunate drawback: since the tree stores the result of hashing the whole code, we still need to provide the full code as part of the proof if we want to prove a small slice of it.

This is the reason for the worst-case scenario of proving the state for an L1 block. You can craft a block that forces the prover to include a contract code of maximum size. A better tree design should allow for more efficient proofing of account code slices.

Proving parts of an account’s code is critical to efficiently allow stateless clients or block state proofs. During a transaction execution, only a small fraction of an account’s code is typically executed. Think, for example, of an ERC-20: the sender will execute the transfer method but never call other methods like balanceOf on-chain.

Proposed tree strategies

The new tree proposals address these problems by proposing:

- A more efficient encoding of data inside the tree.

- Including the account’s code inside the tree, allowing size-efficient partial code proving.

- A more convenient merkelization strategy to generate and verify proofs more efficiently.

Verkle and Binary trees share the same strategy for solving the first two points — we’ll dive deeper into them on the Data encoding page. Each proposes a different strategy for the last point, explained in their respective Verkle Trees and Binary Tree pages.

Data encoding

Overview

How the Ethereum state is encoded into the tree can significantly impact data inclusion and updating, proof generation, verification, and size. Verkle and Binary Trees share a new way of encoding the data into the tree, so we’ll explore more on this page.

Code-chunking

In the parent chapter, we mentioned that we need an efficient way of proving slices of any account code. The current proposal encodes the account code bytecode directly in the tree, compared to only storing the code hash as done today.

For key design simplicity, the tree leaves hold 32-byte blobs, which means we need a way to break down account code into 32-byte chunks. Although the most natural approach is partitioning the code into 32-byte chunks and storing it in the tree under some defined tree key mapping for each code chunk, this isn’t enough.

The reason is that there are EVM instructions, i.e., JUMP and JUMPI, whose arguments contain an arbitrary offset to jump. For the jump to be valid, the target location must be a JUMPDEST opcode (0x5B). Today, EL clients do a JUMPDEST analysis to detect all valid jump destinations in existing code — this analysis requires full code access to detect which bytes are JUMPDEST instructions.

In a stateless world, clients only have partial access to code, so they can’t do a complete JUMPDEST analysis. For example, an account code has an instruction PUSH5 0x00115B3344, which maps to bytecode 0x6400115B3344. If a JUMP(I) instruction jumps to the fourth opcode, you might think this is valid since it’s a 0x5B, but this byte corresponds to the data of PUSH5, not a valid JUMPDEST. A stateless client must be sure which 0x5B bytes correspond to real JUMPDEST to perform JUMP(I) validations without requiring all the account’s code.

This means that the code chunkification strategy should not only slice the account’s code into 32-byte chunks but also in a way that allows an EVM interpreter receiving these chunks to detect invalid JUMP(I) instructions.

In the 31-byte code chunker page, we explore the currently proposed code chunker in more detail. This book will soon include other proposal candidates for code chunkers, so watch for updates!

It’s worth noting that if the EOF upgrade is deployed to the mainnet, the problem of invalid jumps would be solved for contracts using this format. This doesn’t mean we can completely forget about it since only newly created EOF contracts could avoid this problem, but we still have to support legacy contracts probably forever.

Grouping

If we think carefully about how usual EVM code is executed in blocks, we can note two facts:

- Accounts’ basic data, such as nonce and balance, are usually accessed together.

- Whenever a storage slot

Ais accessed, there’s a high probability that nearby storage slots are also accessed. - Although code execution isn’t perfectly linear, if we execute code chunk

A, there’s a high chance we’ll execute code chunkA+1.

In other words, state access during a block execution isn’t random.

This is an optimization opportunity since if we group states frequently accessed together in the same tree branch, proving the whole state requires fewer tree branches, making the state-proof size smaller. This grouping is also convenient for future ideas such as spreading tree state into a separate network such as the Portal Network.

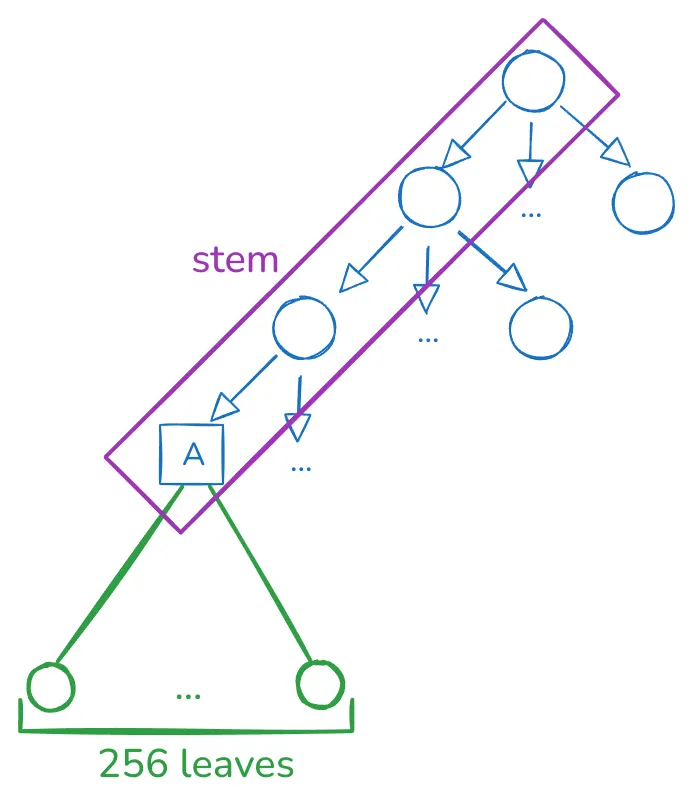

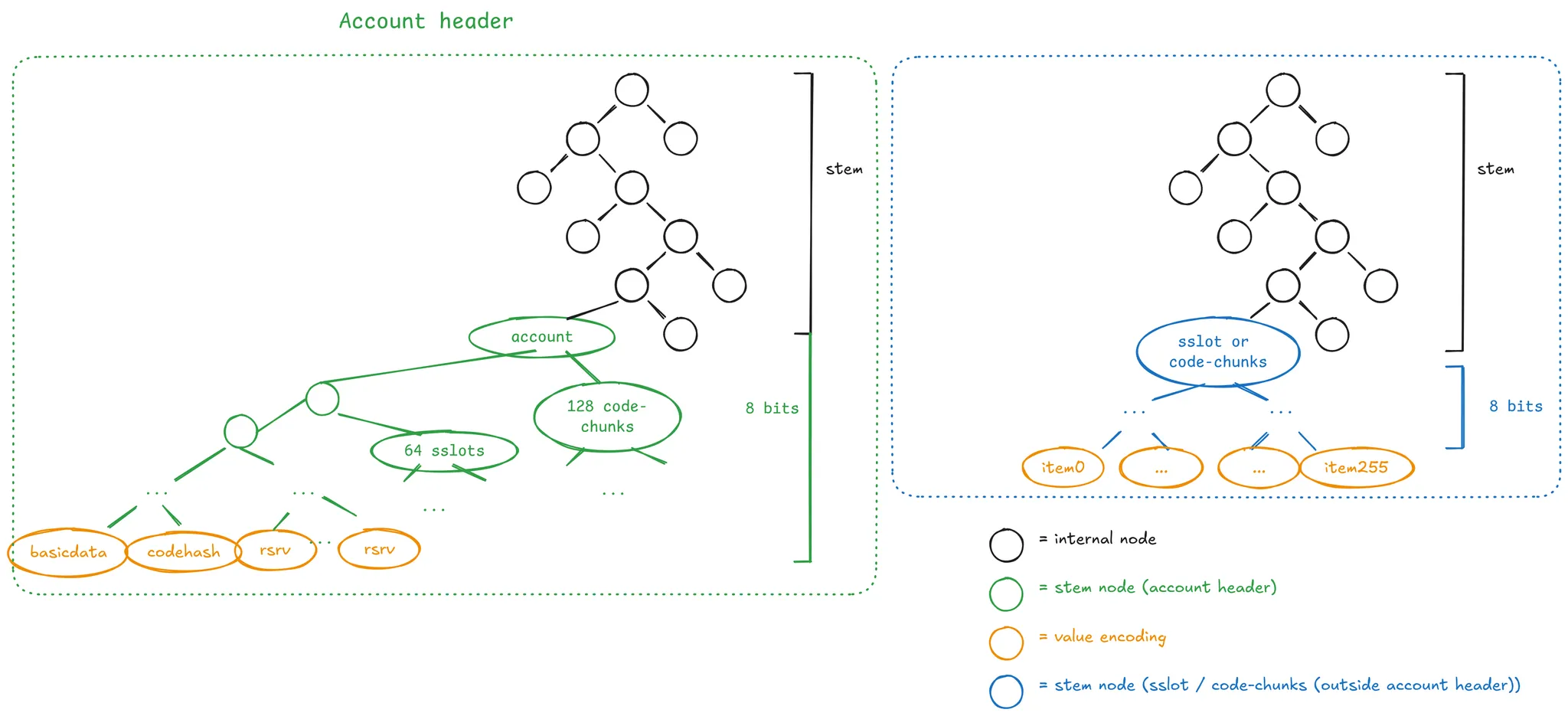

The current proposal is creating groups of 256 leaves, which can be depicted in the following diagram:

Please don’t focus on the tree arity since this will depend on the underlying tree design. The main point is that given a stem, we encode 256 leaves in that single branch, and we expect these values to have a high probability of being accessed together. Choosing a size of 256 is entirely arbitrary, but for historical reasons rooted in Verkle Trees proving efficiency. For Binary Trees, there’s more flexibility in choosing a different size.

Note that these 256 values can contain any arbitrary data. The current proposal has the following stem types:

- An account stem contains:

- One leaf encodes the nonce, balance, and code size.

- One leaf encodes the code hash.

- 64 leaves being the first 64 storage slots.

- 128 leaves being the first 128 code chunks.

- The unused leaves are reserved for potential future use.

- An account storage stem contains 256 consecutive storage slots for an account, excluding the first 64 storage slots that live in the account stem.

- An account code stem contains 256 consecutive code chunks of the account’s code, excluding the first 128 code chunks that live in the account stem.

Each account property (nonce, balance, etc.), storage slot, and code-chunk grouping is done by defining a proper tree key mapping. The way this is done depends on the specific tree proposal. Still, the general strategy is defining a function that generates the first 31 bytes for the 32-byte tree key, defining the stem, and the last byte indicates which of the 256 leaves corresponds to the required data.

For example, as mentioned in the above bullets, the storage slots 3 and 4 live in the account stem. The account stem defines the first 31 bytes of the tree key, so both storage slots share this byte prefix. Their last tree-key byte is HEADER_STORAGE_OFFSET+3 and HEADER_STORAGE_OFFSET+4, respectively. HEADER_STORAGE_OFFSET currently is 64, meaning in the group of size 256, we store the first 64 slots at offset 64.

31-byte code-chunker

Background reading

To get a proper background on where this code chunker fits into stateless Ethereum, read the Trees introductory chapter and the Code chunking section of Data encoding.

How does it work?

Let’s look at the following example code:

PUSH1 0x42 # 6042

PUSH1 0x00 # 6000

MSTORE # 52

PUSH2 0x0001 # 610001

PUSH2 0x0002 # 610002

ADD # 01

MSTORE # 52

PUSH20 0x0000000000000011223344556677889900115b33 # 73<...>

RETURN # F#

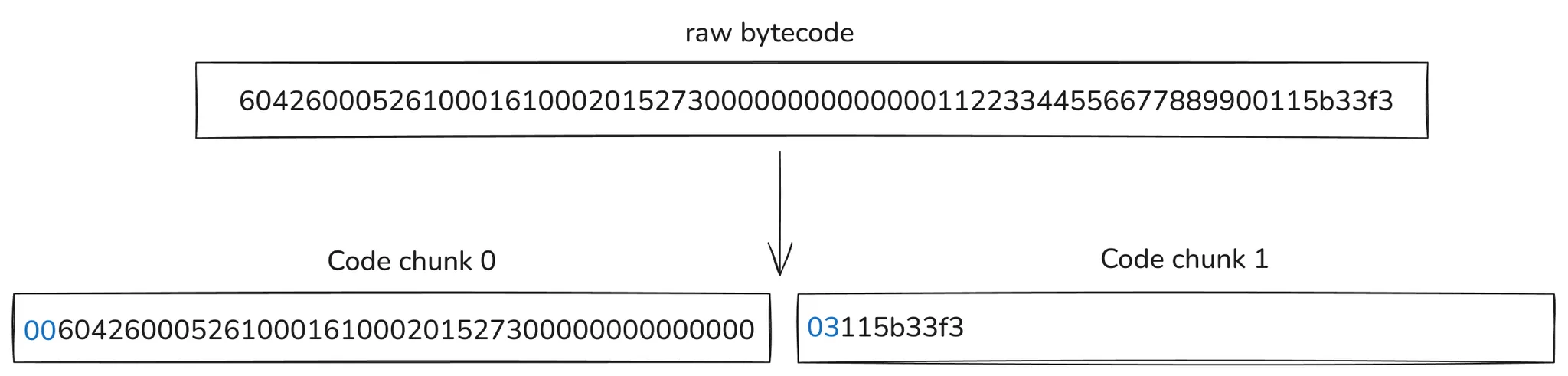

The following diagram shows how the code is chunkified:

Recall that an account code is a blob of bytes containing all the contract instructions. The goal of the code chunker is to:

- Output a list of 32-byte blobs, which will be stored as tree leaves.

- Given a code chunk without any extra information, an EVM interpreter should be able to detect if a JUMP to any byte in this chunk is valid.

Requirement 1 is easy to understand since each tree leaf stores 32-byte blobs. We can appreciate this requirement being fulfilled since the presented Python code returns a Sequence[bytes32].

Requirement 2 emerges from the fact explained in the Code chunking section in the current chapter. Since any JUMP(I) can jump to any byte offset in any chunk, an EVM interpreter should be able to detect if this jump is (in)valid without any further information than the code chunk itself.

The way the 31-byte code chunker resolves this is as follows:

- The account bytecode is partitioned into slices of 31 bytes in size.

- The first byte contains the number of bytes starting from the 31-byte slice that account for PUSHDATA, i.e., a previous

PUSH(N)instruction data.

In the diagram, this first byte is shown in blue. Note that the second code chunk indicates that the first 3 bytes correspond to the previous PUSH20 instruction. This allows the stateless client to note that the 0x5b byte in this code chunk isn’t a valid JUMPDEST!

Using this 1-byte information at the start of the code chunk allows the EVM interpreter to detect if any jump to an offset in the code chunk is valid, i.e., 0x5B is an actual JUMPDEST. Given that the bytecode length of a contract is N bytes, we know that the number of required chunks is ceil(N/31). If you’re interested in a Python implementation of the chunker, see the second code snippet here.

The design of this code chunker is very simple, but the encoding efficiency is not optimal. For example, if a bytecode doesn’t contain any PUSH(N) instruction, then we know any JUMP(I) is valid, but we’re still only encoding 31 bytes of actual code in 32-byte code chunks. Similarly, if this is an EOF contract, by construction, we know all jumps are valid; thus, we don’t require the extra byte. Soon, we’ll describe other code chunkers with different tradeoffs.

32-byte code-chunker

Background reading

To get a proper background on where this code chunker fits into stateless Ethereum, read the Trees introductory chapter and the Code chunking section of Data encoding.

How does it work?

This approach aims to store code in full 32-byte chunks while efficiently encoding the necessary metadata to validate jump destinations. This proposal contrasts with the 31-byte chunker by storing metadata separately rather than prepending it to each chunk.

The goals remain similar:

- Output the code itself as a list of 32-byte blobs (chunks), which will be stored as tree leaves.

- Provide a mechanism, using separate metadata, for an EVM interpreter to detect if a

JUMP(I)to any byte offset is valid (i.e., targets aJUMPDESTopcode and not PUSHDATA).

Instead of adding metadata to every chunk, the “Dense Encoding” variant method focuses only on potential ambiguities:

- The account bytecode is partitioned into full 32-byte chunks.

- We identify only the chunks that contain invalid jump destinations. An invalid jump destination is a

0x5bbyte that occurs within the data part of a PUSH instruction. - A map

invalid_jumpdests[chunk_index] = first_instruction_offsetis created. first_instruction_offset indicates the offset within the chunk where the first actual instruction begins. - This map is then encoded very efficiently using a Variable Length Quantity (VLQ) scheme (specifically, LEB128) applied to a combined value representing the distance between invalid chunks and the first_instruction_offset.

- This densely encoded metadata is stored probably prepended as a custom table for legacy contracts, i.e., before the actual originalbytecodes. EOF contracts can’t have invalid jumps since this is validated at deployment time.

Runtime usage

When a JUMP(I) occurs:

- The EVM checks if the target byte offset contains the

JUMPDEST(0x5b) opcode. - If the chunk index is not in the

invalid_jumpdestsmap, the jump is valid (assuming step 1 passed). If it is present in the map, the EVM must perform a quick analysis of that specific chunk, using the associatedfirst_instruction_offsetfrom the map, to parse the instructions within the chunk and confirm whether the target 0x5b is indeed aJUMPDESTopcode and not part of PUSHDATA.

Efficiency

This method leverages the observation that invalid jumpdests are rare in typical contracts. The code itself is stored in full 32-byte chunks (ceil(N/32) chunks for code length N). The metadata overhead is very low on average (~0.1%) and has a worst-case overhead of 3.1% (if every chunk contained an invalid JUMPDEST). For example, for contract size limits of 24KiB, 64KiB and 256KiB the maximum table overhead would require 24, 64 and 256 code-chunks respectively, assuming a best case table encoding of 1 byte per entry. Note that after 128KiB the table won’t fit into the 128 code-chunks reserved in the account header.

Implementations

For a Python implementation, you can refer to the spec. There is also a Go implementation.

Verkle Tree

Overview

Verkle Trees were the first tree design considered a viable solution for stateless Ethereum. The roots of this idea are in a paper published by John Kuszmaul. This idea superseded older Binary Tree approaches from years ago when SNARK proofs were still sci-fi technology.

Due to advancements in SNARK proving systems performance and concerns about quantum risks, Verkle Trees might be superseded by Binary Trees. This is still under discussion; stay tuned!

Role of vector commitments in the design

At the core of Verkle Trees’ design is the use of a cryptographic component named vector commitment.

Without getting into formal definitions, this construct allows us to fill a vector with a fixed size N and:

- Compute a commitment to the vector, a succinct fingerprint of its contents.

- Given the commitment, we can efficiently generate a small proof to prove that a particular entry in the vector has a defined value.

- For Verkle Trees,

N = 256for proof efficiency reasons.

This construct addresses a fundamental problem with high-arity trees, such as the current Merkle Patricia Trie (MPT). For an MPT Merkle proof, each internal node in a branch must provide 15 siblings so the verifier can calculate the corresponding hash, verifying all the branches chain up to the root. This means the arity is a significant amplification factor for the proof size.

If we instead use vector commitments to represent child commitments, the construct allows us to generate proof only for the required children. Said differently, the proof size isn’t linear to the vector length.

The cryptography around the vector commitments is based on Inner Product Arguments and Multiproofs. This construction allows the generation of proofs without trusted setups and aggregates multiple vector openings into a single proof. This means that if we need to prove the openings of multiple vectors at different positions, we can generate a unique short proof of all those openings. This is precisely what we need for state proofs in this tree since proving a branch means doing one opening per internal node in the branch to connect all the vector openings up to the expected root.

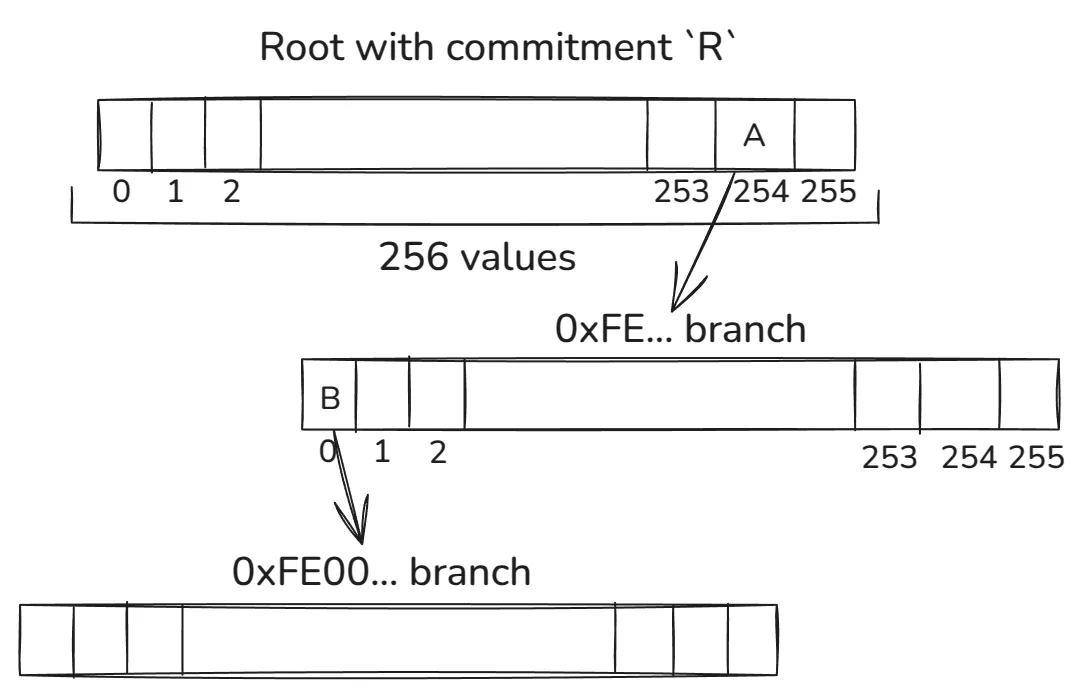

Tree design

A tree key is still a 32-byte blob. The first 31 bytes define what’s called a stem. A stem decides the main branch from which all 256 values for that stem will reside. Note that the last byte of the tree key defines exactly 256 values; thus, we can conclude that the first 31 bytes define the tree key path, and the last byte defines which bucket from the 256 items contains the value.

Let’s look at the following diagram to understand how the stem maps to the tree path:

Each byte of the stem defines which item from each internal node from each level is used to walk down the path. This is shown by the 0xFE... and 0xFE00... examples depending on which path we use to walk down the tree. The A and B are vector commitments to the corresponding pointed vectors.

Given a stem, we always walk downstream until we reach a point where no other stem exists in the corresponding sub-tree. At this point, we insert the leaf node for the stem.

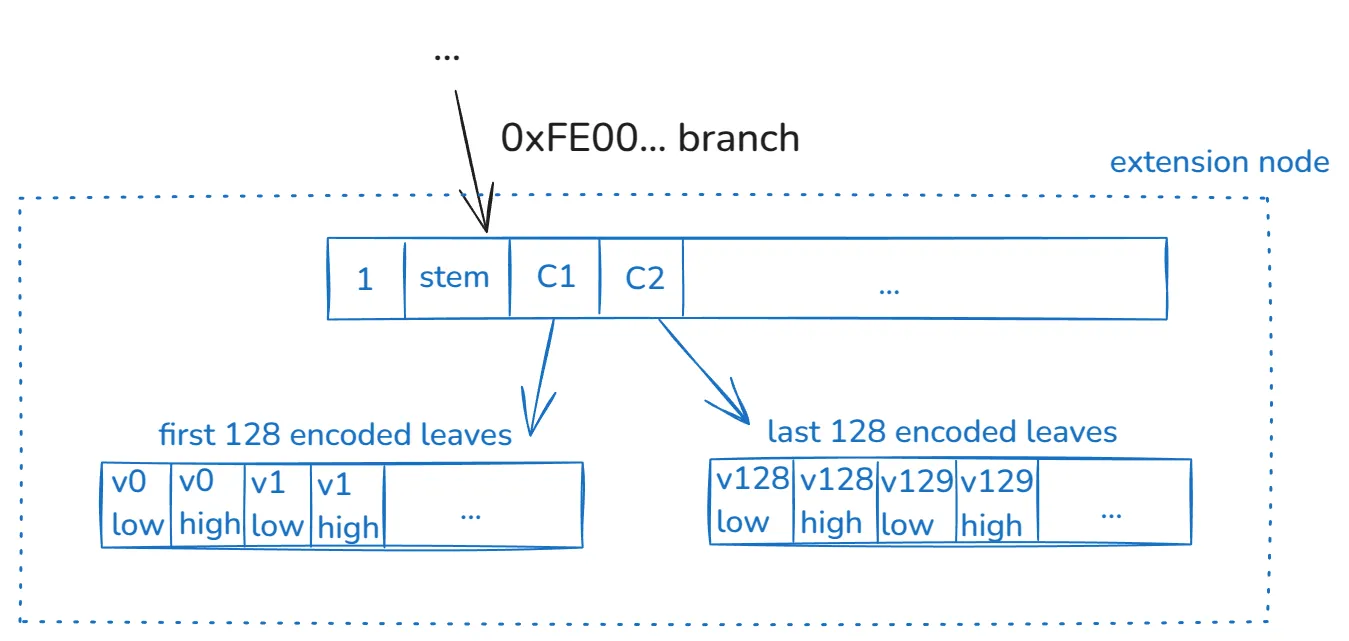

Continuing with our example, we see that after the first two levels, we reached an extension node:

This construct (extension node) encodes the 256 values of the stem. As previously mentioned, in this example, no other stems exist in the tree with the prefix 0xFE00— if that were the case, we’d have more internal nodes branching the tree.

This extension node is constructed in the following way:

- As usual, a 256 vector with the first 4-items encoding:

- The value

1to prove this vector corresponds to an extension node. - The

stemvalue. Recall that the path can’t fully describe this value. C1andC2, which are commitments to two vectors.

- The value

- A 256 vector encoding the first 128 values of this stem. Each value is represented in two items. The commitment of this vector is the

C1mentioned above. - An analogous 256-vector mentioned in the previous bullet, but for the last 128 values of this stem, which has commitment

C2.

The main reason why we need to encode each 32-byte value in two buckets is related to the vector commitment construct. Each item in the vector is a scalar field element of a defined elliptic curve. This scalar field size is less than 256 bits. Thus, we need two finite field elements to encode a 32-byte value. Encoding also has a further rule distinguishing between written zeros and empty values.

We suggest reading this article to understand other details about the design.

Proof Construction

This section provides a high-level overview of how the proof is constructed.

At a high level, a block execution requires proving a set of key values from the tree so a stateless client can re-execute the block. Each key-value corresponds to a branch and a particular item in its corresponding extension node. Multiple key values might share the same main branch (i.e., stem).

The proof needs to do each corresponding vector opening from the extension node up to the tree root. In our example above, if we want to prove v1 value, we’d have to do the following openings:

- For

C1at position 2 proving has valuev1_low. - For

C1at position 3 proving has valuev1_high. - For

Bat position 0 proving has value1. - For

Bat position 1 proving has valuestem. - For

Bat position 2 proving has valueC1(note that openingC2is not needed!). - For

Aat position 0 proving has valueB. - For

Rat position 254 proving has valueB.

Given the list of key values to prove, many might share openings that are only done once. The proof contains extra information to decide how the verifier should expect each stem to map to tree branches.

Note that the prover does not provide R since it’s known to the verifier, i.e., it is the state root of the tree. As mentioned before, all these vector openings are batched in a single proof, which compresses all the openings in a single short proof.

We recommend reading this article if you want a more in-depth explanation of proof construction.

Binary Tree

Note: The following explanation is based on a recent proposal from Binary Trees. The design is expected to change as more people from the community provide feedback.

Overview

Using Binary Trees for the state tree is not a new idea. Back in 2020, this idea was explored in EIP-3102. Then, SNARKs were still in the early stages, and soon after, Verkle Trees became a more promising approach, so this direction was abandoned.

Many years have passed, and around mid-2024, two main motivations started to reignite the possibility of reconsidering Binary Trees compared to Verkle Trees.

The first one was some breakthroughs in quantum computers, which raised the concern that they could be a potential risk in 10 or 15 years. There’s no consensus around interpreting recent events, so there’s still the possibility that they will take many more years or never be a real risk for elliptic curve cryptography. Depending on how conservative core developers want to be, this might be a significant decision factor. Also note that if Verkle Trees are deployed, at least one extra state tree conversion is guaranteed to happen.

The second one is the rapid pace of improvement of SNARK/STARK proving systems. The speed at which they can provide proving throughput for hashes has been improving rapidly without requiring absurd hardware specs. Depending on the hash function used, the proving performance is or isn’t enough for L1 needs — we’ll dive more into this later.

In summary, a Binary Tree construction is now again a potential option for a SNARK-friendly state tree with other tradeoffs compared with the Verkle Tree.

Tree design

The currently proposed EIP-7864 pulls many ideas from EIP-6800, which are still helpful. These include many of the desired properties described in the Data Encoding page.

The tree construction is more straightforward than Verkle Trees since it relies only on a hash function for merkelization. The arity of the tree is defined as two since this arity minimizes the proof sizes. To learn more details, refer to the corresponding rationale section of the EIP.

Given a tree key, we define the first 31-bytes as the stem. This stem defines the main path where the 256 values are determined by the last byte of the tree key. This is better described by the diagram presented in the EIP:

Given a tree key value to be inserted:

- With the first 31-bytes, we walk the black nodes (i.e., internal nodes). Starting with the most significant bit, each bit walks the tree downstream from the root.

- When we reach an internal node representing an empty subtree, we insert the leaf-level subtree, a full Merkle tree with 256 leaves. Said differently, the 256 values for this stem are a full tree with 8 levels.

- Depending on the last byte of the tree key, we store the desired value in the corresponding leaf.

This idea is the same as Verkle Trees, where each stem stores 256 values corresponding to the last byte of the tree key. Instead of using vector commitments, we use a full Merkle tree as a form of vector commitment.

The tree is merkelized using a hash function which allows us to hash 32-bytes -> 32-bytes and (32-bytes, 32-bytes) -> 32-bytes. Any secure hash function can be used with this construction. The current EIP proposed Blake3 as a conservative one, but this isn’t fully decided—more about it in the next section.

Proof Construction

If we need to build a Binary Tree proof for a list of key values, we have two options:

- Build a usual Merkle Tree. This is not different from how you can build proofs in the current Merkle Patricia Tree. The construction will be much more straightforward, i.e., we don’t have accounts and storage tries but a unified state tree, no RLP is used for encoding nodes data, etc. Also, the arity of the tree generates smaller proofs.

- For an L1 block required pre-state, a worst-case scenario still generates a tree proof that is bigger than desired. For these cases, we can create a SNARK/STARK proof, which is a Merkle Proof verifier in a proven circuit. This requires more work to generate, but the proof is smaller.

Hash function for merkelization

When using SNARKS/STARKs to generate a state-proof check, the proving performance is heavily influenced by the hash function used for merkelization. Normal cryptographic hash functions such as Keccak and Blake3 aren’t designed to be efficiently proven in circuits. Other cryptographic hash functions, such as Posiedon2 (i.e., arithmetic hash functions), are specifically designed to be efficiently proven in SNARKs. Their main difference is that arithmetic hash functions don’t rely on bitwise operations but directly work on desired finite fields matching the underlying proving system.

The current proving performance on desired hardware specs for normal hash functions such as Keccak or Blake3 is still an order of magnitude slower than required for L1 blocks. Hence, we must wait for them to keep improving in performance or for them to need more powerful hardware for block builders.

However, if we have an arithmetic hash function such as Poseidon2, the current proving performance on target hardware is more than enough for L1 block needs. The main drawback is that Poseidon2 is still not considered safe for use in L1. The Ethereum Foundation cryptography team has recently launched a public initiative to assess its security more formally. From another perspective, multiple zk-L2 have been using Poseidon for some years, which indirectly means billions of dollars publicly at stake — while this isn’t a formal bug bounty program, any black hat hacker that knows how to break the hash function could already attack these networks. This doesn’t prove Poseidon is safe, but it gives an optimistic perspective while the formal assessment is done.

Ideally, the Binary Tree should use Poseidon2 since it offers the best-proving performance. This allows block builders to have lower hardware specs, which is good for decentralization. Moreover, it’s crucial that proving the hash function doesn’t get in the way of bumping the block gas limit to keep improving L1 scaling. Hopefully, protocol developers can make a final call on this front within the next year.

BLOCKHASH state

Motivation

In a stateless Ethereum world, we require that a block provides only the data needed for its execution, compared to today’s reality of forcing the clients to store the whole state. This applies not only to plain re-execution but also to SNARK proofs.

Usually, all the required state for executing EVM instructions rely on data stored in the state tree. For example, CALL, BALANCE, SLOAD, and EXTCODEHASH always involve reading the state to resolve their execution. Since this data lives in a merkelized tree, we can generate state proofs to provide this data trustlessly.

There’s a particular exception for BLOCKHASH. Before the Pectra update (planned for Q2 2025), executing BLOCKHASH relied on information not stored in the tree. This meant that execution clients must store the last 256 block hashes in a separate storage. This isn’t a big ask since full nodes store the whole blockchain anyway, so accessing this information was easy. While the blockchain is a merkelized structure, and you could provide proof for the block hashes, this would require up to 256 block headers for the evidence, which is prohibitive.

Setting up the stage…

In Pectra, the protocol took an important step towards fixing this problem by delivering EIP-2935. This EIP adds a new rule to the protocol where each new block includes the previous block hash in a system contract.

This means that BLOCKHASH can now be resolved from the state tree, as all other EVM instructions do. EIP-2935 doesn’t force BLOCKHASH to use the state tree, so clients shouldn’t change how they execute BLOCKHASH.

Fixing the last missing piece…

The last required change is finally requiring BLOCKHASH to be resolved from the state tree rather than from the blockchain. This is where EIP-7709 comes into play.

The change is quite simple. BLOCKHASH now involves a state tree access, which can be done by reading the corresponding storage slot from the EIP-2935 contract. This implies that the gas cost for the instruction should now map to an equivalent SLOAD.

Backward compatibility

Despite EIP-2935 storing 8191 historical block hashes, the BLOCKHASH instruction only serves the last 256 values. This is required to avoid a breaking change in the instruction semantics (i.e., current BLOCKHASH executions asking for older values must still return 0).

Gas cost remodeling

Recommended reading

It’s highly recommended that you read the Trees chapter and Data encoding page since those changes are fundamental to the need for a gas model reform.

How are costs related to stateless Ethereum?

As discussed in other chapters, multiple protocol changes are needed to achieve Ethereum statelessness. Some of these changes must impact gas costs since they are profound changes that should reflect new CPU or bandwidth costs or mitigate new potential attack vectors.

Changing gas costs is always a complex problem since the gas cost model is part of the blockchain’s public API. Although the protocol can’t promise fixed gas costs forever, it’s usually something that we must think carefully about since it can break existing contracts that have baked-in assumptions. Not all gas cost changes are negative, but the overall impact (positive or negative) depends on each case.

What are the main drivers for gas cost changes?

The following are reasons that motivate doing a gas cost remodeling:

- The block will contain an execution witness that includes new information—at a minimum, it will contain proof. The block size is affected by EVM instructions, which depend on the state.

- The new trees have a new grouping strategy for storage slots, which attempts to lower the gas cost access.

- Code is also included in the tree, so gas must be charged to access it when contracts are executed.

The only EIP proposed to address these changes is EIP-4762, which is currently focused on the Verkle Trees as the new tree. For Binary Trees, the execution witness is not entirely clear today. The book will contain an explanation whenever the roadmap is clearer on this front, so stay tuned!

EIP-4762

Overview

This EIP can initially be intimidating, but the underlying principle is simple. Every direct or indirect state access required in a block execution must be included in the execution witness. Not doing so means that if a stateless client attempts to re-execute this block, it will be unable to do so due to a lack of information.

This EIP’s goal is to define new gas cost rules to account for:

- Chunkification of account properties (e.g., nonce, balance, code).

- Disincentivize growing the block execution witness.

- Avoid changing current costs as much as possible to limit UX impact.

A necessary clarification is that this EIP is currently designed for the Verkle Trees proposal.

Dimensions of gas cost changes

Let’s look at the different dimensions of gas cost changes.

EVM instructions new gas costs

The following instructions have a new gas cost:

CALL,CALLCODE,DELEGATECALL,STATICCALL,CALLCODESELFDESTRUCTEXTCODESIZE,EXTCODECOPY,EXTCODEHASHCODECOPYBALANCESSLOAD,SSTORECREATE,CREATE2

These instructions share the common feature of accessing the state to perform their action; thus, their gas cost should reflect the new reality.

Let’s look at some examples:

BALANCE: when this instruction is executed, theBASIC_DATAleaf for the target address is accessed. This leaf should now be part of the witness.SLOAD: might be the most obvious example, since the target storage slot leaf will be included in the witness.EXTCODECOPY: recall that now the account code is part of the tree, so accessing the code of any account means state access, which is included in the witness.

Code execution

As explained in the Accounts code section of Trees chapter, account code is now part of the tree. This is required since stateless clients need the code to re-execute the block. Instead of providing all the account codes, we only need the code chunks effectively accessed during block execution (which is usually far less than the complete code).

The EVM interpreter must include any code chunk containing executed instructions to do this. Note that if two or more instructions from a given code chunk are executed, the code chunk is only included and charged once. The included code chunks aren’t always consecutive since instructions like JUMP or JUMPI can jump to a different code chunk.

Note that most opcodes only span a single byte of a code chunk, except instructions with immediates. For example, when executing a PUSH(N) instruction, we must account for 1+N bytes in the code since the immediate should also be included. This is important since a PUSH(N) corresponding bytecode could span multiple code chunks.

Out of gas and REVERT

It’s worth noting that if a transaction execution runs out of gas or executes a REVERT instruction, everything added to the witness isn’t removed. This should feel natural since when a stateless client re-executes the block, it still needs all the code chunks and state required to reach the reversion point.

Transaction preamble

In today’s rules, an intrinsic cost of 21_000 gas includes warming rules or particular accounts, such as the transaction origin. This is required since we have to validate that the transaction is valid by doing nonce and balance checks. All these implicit (or rather, included) accesses within the intrinsic cost are also included in the execution witness at today’s cost.

Contract creation

Creating a contract has some preliminary rules that must be enforced. For example, we must do a collision check on the new contract address since we can’t overwrite the existing contract code. This means the execution witness will include account field accesses since stateless clients require this data for validity checks.

Recall that the contract code is now stored in the tree. This means that the transaction that creates a contract now performs writes into the tree, which should be accounted for. The current gas rules indicate that 200 gas per contract byte should be charged — this rule has been removed and simplified to be considered a tree write. This new reality must be reflected in the gas costs.

Block-level operations

A block execution entails more actions than transaction execution. For example, staking withdrawals are operations done after the list of transactions is executed—these actions also perform read/write operations into the tree. These operations don’t have any gas cost, but they should still be accounted for as information to be included in the execution witness.

Precompiles & system contracts

Precompiles and system contracts have some exceptions to the rules described in this EIP.

Regarding precompiles, if we do a non-value bearing CALL to a precompile, we are not strictly required to include its BASIC_DATA, which contains the code size. Usually, the code size is needed so a stateless client can detect jumps beyond the contract size. But precompiles don’t have bytecode contracts, and we know their size is always 0.

We can look at an example of system contracts. By EIP-2935, a system contract stores the previous block’s hash. Although in the previous section, we mentioned that contract execution must include all code chunks, the bytecode for this system contract is defined at the spec level—stateless clients already have the contract’s bytecode, so we can avoid including it in the witness.

Access events

All the above rules are handled in a unified way via access events. Access events keep track of all state access (read/write) required during a block execution, which is needed for correctly charging the gas costs.

These are the relevant constants to understand how gas is charged:

WITNESS_BRANCH_COSTwhen a new tree branch has a read access for the first time.WITNESS_CHUNK_COSTwhen a specific leaf has a read access for the first time. Recall that tree branches contain 256 leaves.SUBTREE_EDIT_COSTwhen a branch must be updated. This means that at least one write was done in this branch leaves.CHUNK_EDIT_COSTwhen an existing leaf is overwritten with a new value.CHUNK_FILL_COSTwhen a non-existing leaf is written for the first time.

Every time a state read/write is included as an access event, we must check if the operation should charge each of the costs mentioned above. These costs are charged once per location, per transaction execution. For example:

- A second write to a storage slot only charges warm-cost since the branch and leaf inclusion were already charged on the first access.

- If the storage slot

0of a contract is accessed for the first time, onlyWITNESS_CHUNK_COSTis charged. Recall that the first 64 storage slots live in the same branch as accounts fields. Some form of “call” that started this contract code execution already paid forWITNESS_BRANCH_COST, so we only need to chargeWITNESS_CHUNK_COST. - When the first byte of a code-chunk is accessed, we charge

WITNESS_BRANCH_COST+WITNESS_CHUNK_COST. Access costs for the subsequent instructions executed in the same code chunk aren’t charged. When bytecode execution overflows into the next chunk in the same branch, onlyWITNESS_CHUNK_COSTis charged. And so on.

State conversion

Introduction

State conversion is an important and complex topic for a stateless Ethereum. As mentioned in the Trees chapter, one required protocol change is changing the tree used to store the Ethereum state. Although it is easy to spin up a new blockchain with a new shiny tree, life is more complicated when you need to do it with an existing tree storing a state of ~300GiB.

This chapter explains how the protocol will switch the state tree to the new target tree while attempting to run seamlessly, flawlessly, and safely with minimal user impact.

In sub-chapters, we dive deeper into explaining more low-level spec proposals on how this is done in more detail and intricacies. We suggest readers read sub-chapters in order since each will rely on a good understanding of the previous ones.

Motivation

To understand why we need to care about this problem, please read the Tree chapter of this book. Going forward, we only need to understand that a new tree should be used, and somehow, it has to be introduced into the protocol.

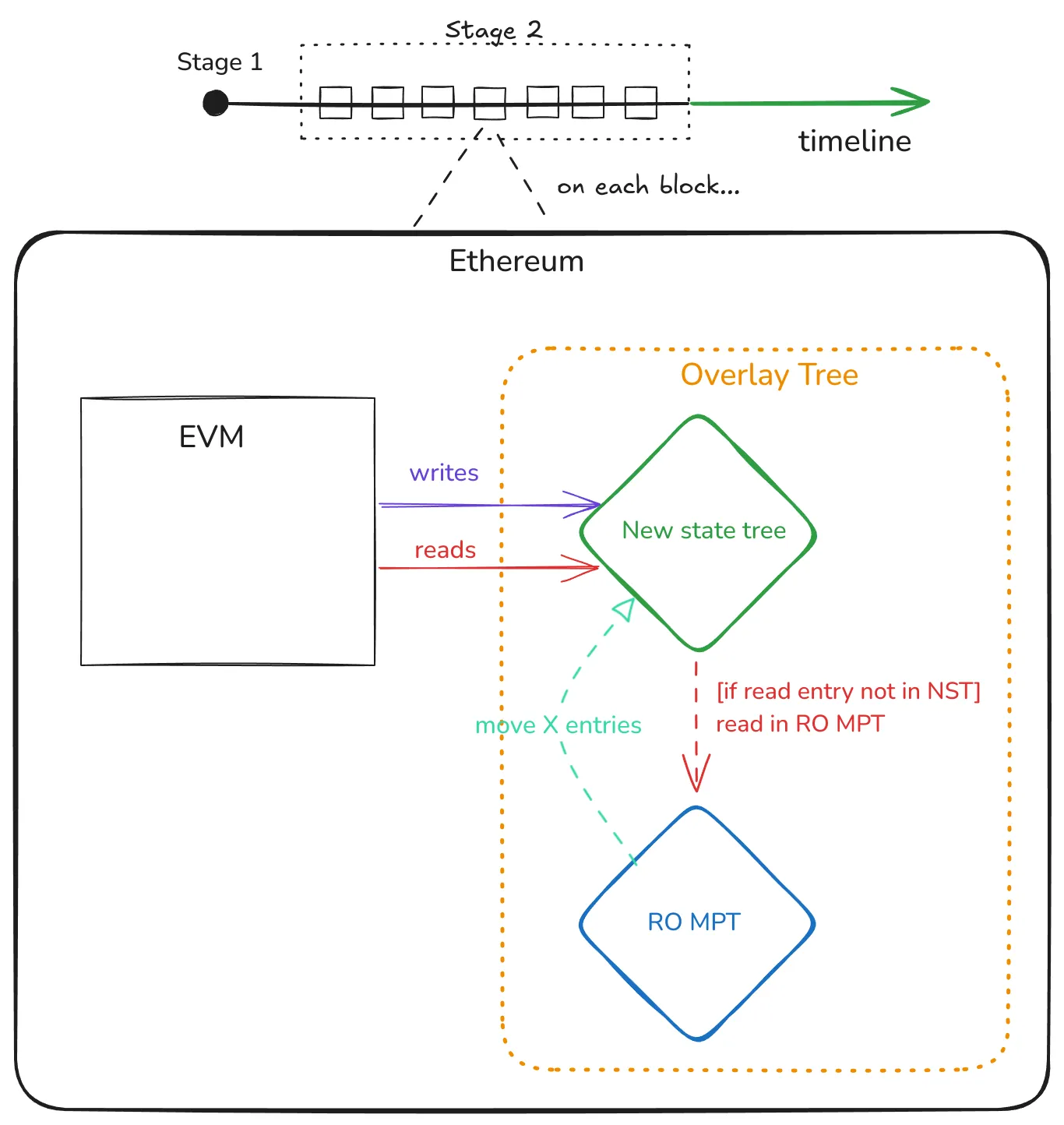

Today’s proposed strategy is converting the data with an Overlay Tree, which we explore in more detail in sub-chapters, but for now, let’s stick to the big picture.

Big picture

Here’s a summary of what we want to achieve:

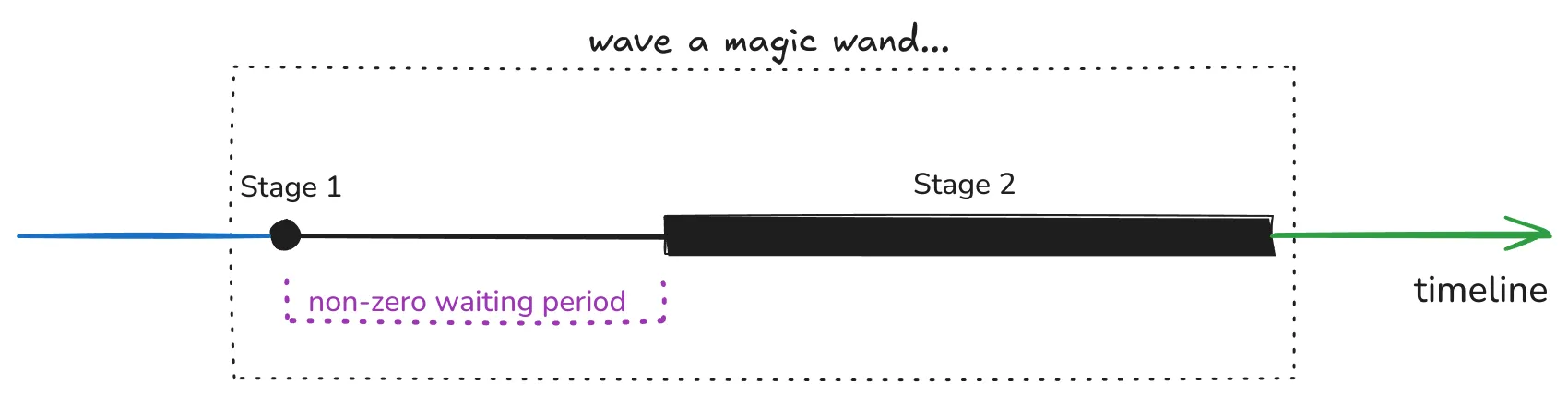

Unfortunately, we can’t wave a magic wand and call it a day, so the current proposal will go in two phases.

Note that these two phases will happen while the chain runs as usual, so we’re changing the system’s database while the system is running! Previous research has explored offline conversion methods with other tradeoffs and risks.

Stage 1 - Introduce the new tree

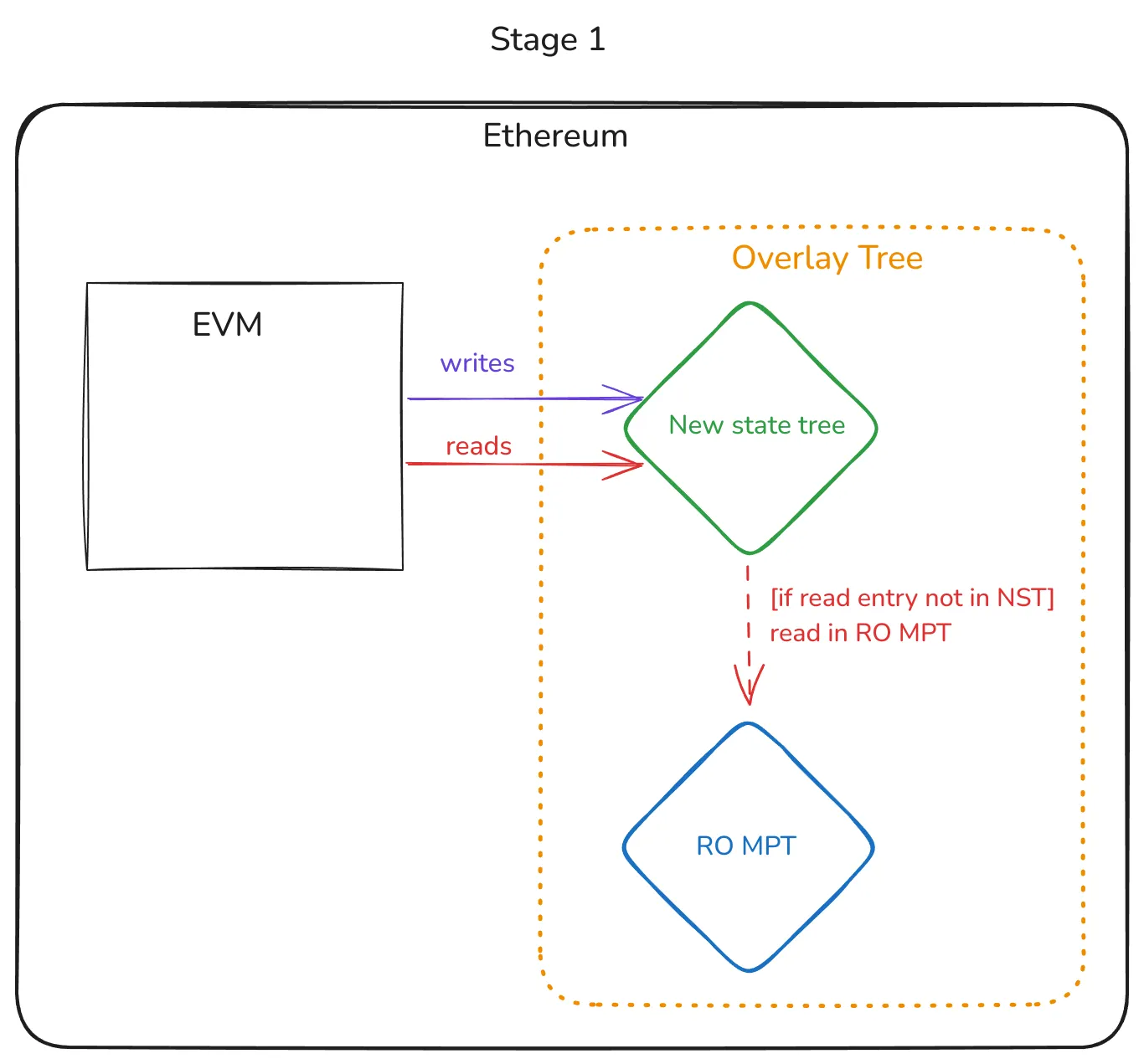

In Stage 1, a protocol change is activated (EIP-7612), which:

- Makes the MPT read-only (i.e., RO MPT).

- Introduces the new (empty) state tree.

- Any new write produced by executing transactions in a block is done in the new tree.

- Any read is first done in the new tree; if the key isn’t found, it is done in the RO MPT.

Note that in the timeline shown before, this stage 1 is a single point in the timeline. As soon as the timestamp activates EIP-7612, the goal of the EIP (i.e., introducing the new tree) is done. This EIP doesn’t deal with moving existing data, which is done by other EIPs, which we will explain soon.

We dive deeper into EIP-7612 in its corresponding sub-chapter.

Waiting period…

There is a required period after Stage 1 is activated before Stage 2 can be activated. The goal is for the RO MPT from Stage 1 to be final (i.e., the chain reaches finalization).

This is critical so no chain reorganization can mutate the RO MPT. Doing this greatly simplifies the implementation of EL clients since they know that not only is the MPT read-only but it’s completely frozen. Moreover, it simplifies the preimage generation and distribution tasks, but we expand on this later in the book.

Stage 2 - Move the existing data from the MPT to the new tree

Let’s unpack what this stage is about:

- The functioning of the Ethereum chain continues to be as we described in Stage 1, with a (finalized!) RO MPT, and the new tree with the defined read/write rules.

- An extra rule is added: on every block, we move a defined (X) number of entries in the RO MPT to the new tree. This is when we start moving the data from the old to the new tree.

- Since the MPT is read-only and we always make progress on each block, we’ll eventually reach the end of the conversion.

Note that this is a high-level explanation of the idea; we’ll dive into how this works in the EIP-7748 sub-chapter.

How are contracts, clients, and users affected during this conversion phase?

This is an excellent and relevant question; we can separate it into multiple dimensions.

Users sending transactions

The state conversion doesn’t change how transactions are created and sent to the blockchain.

EVM contract execution

From the perspective of EVM execution, the state is accessed through usual opcodes without knowing where this is coming from or if data is being moved between trees in the background.

As explained in the EVM gas cost remodeling chapter, Stage 1 (EIP-7612) is bundled with EIP-4762, which changes gas costs which isn’t opaque to the EVM. However, note that these gas cost changes are unrelated to the state conversion but are using the new tree, so the state conversion per se isn’t related to this effect.

State proofs

Recall that we have three periods:

- Before Stage 1

- This is how the chain works today — proofs can be created with the known drawbacks as usual.

- Starting from Stage 1 and continuing until Stage 2 is finished.

- During this period, the chain uses the described Overlay Tree, which is composed of two trees. Creating state proofs during this period is very challenging. Given a key in the state, this key active value might be in the new tree or still in the RO MPT.

- A proof of absence for a key requires a proof of absence in both trees.

- A proof of value in the RO MPT requires a proof of absence in the new tree (i.e., prove that the value isn’t stale).

- The root of the RO MPT isn’t planned to be part of the block since the new state root will be the one from the new tree. This adds extra complexity to proof verification.

- During this period, the chain uses the described Overlay Tree, which is composed of two trees. Creating state proofs during this period is very challenging. Given a key in the state, this key active value might be in the new tree or still in the RO MPT.

- After Stage 2 is finished (i.e., conversion is over)

- We can leverage all the expected benefits:

- Smaller proofs.

- Faster generation and verification.

- Easily SNARKifiable.

- Single root for proving (i.e., the state lives in a unified tree, and not account+storage tries)

- We can leverage all the expected benefits:

Syncing

EL client syncing gets temporarily more complex while the state conversion is running. Since the state exists both in the RO MPT and the new tree, syncing both is required.

The RO MPT syncing has two potentially relevant sub-stages:

- Before RO MPT finalization, healing phases of snap sync are expected to be required.

- After RO MPT finalization, no healing phases are required. The finalized RO MPT root could be transformed into a flat-file state description that can be downloaded and reconstructed from the tree from the leaves.

The new tree will use whatever new syncing mechanism is designed for it.

Syncing isn’t a trivial topic, so more research and experimentation are required.

EIP-7612

Overview

Two EIPs are proposing a new tree to replace the Merkle Patricia Trie(s) (MPT). For more information, refer to EIP-6800 and EIP-7864.

The EIP-7612 describes the first step in this transition. Although its title mentions Verkle Trees, it can be applied to any target tree. It is also a stepping stone in a more general strategy for a full tree conversion.

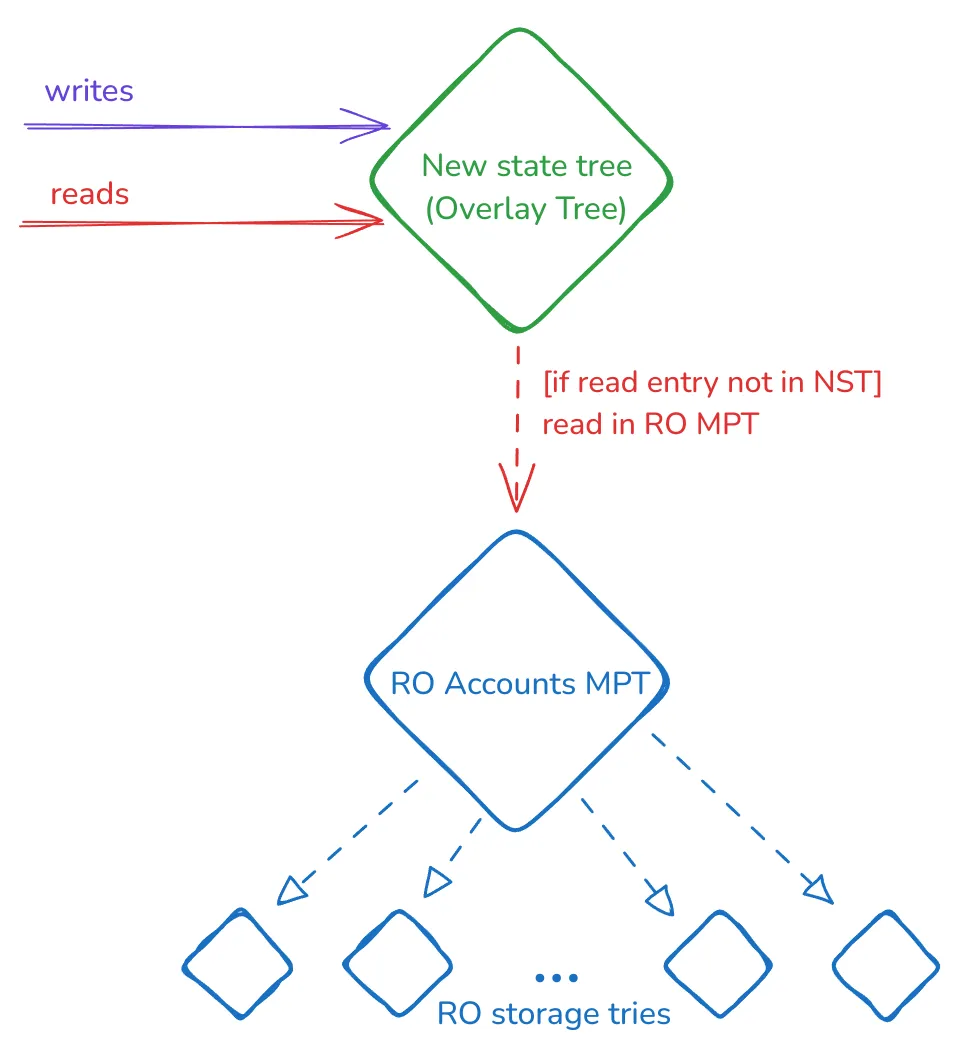

This EIP approach is simple: introduce the empty new tree (Overlay tree) while keeping the existing MPTs, which are now read-only. This construction can be depicted as:

Read and write rules

When the EIP is activated (i.e., FORK_TIME), this Overlay Tree works in the following way:

- On a write operation for a key:

- Store it in the new tree.

- We don’t delete, update, or insert the key in the MPT, being the account or storage trie for an account. MPTs are read-only and can (and will) have missing or stale values.

- On a read operation for a key:

- Read the key in the new state tree:

- If the key exists, return its value. If it doesn’t, move to the next step.

- Read in the corresponding account/storage trie and return the result.

- Read the key in the new state tree:

Block state root

For all the blocks after the EIP activation timestamp, the block state root is the root of the introduced tree. The read-only account MPT root won’t be part of the block, but since it’s read-only, we can assume its root is the latest block before EIP activation.

State proofs and syncing

Please refer to the corresponding sections in the State Conversion chapter: State Proofs and Syncing.

EIP-7748

Recommended background

We highly recommend reading the high-level explanation for state conversion in the State Conversion chapter and the EIP-7612 explanation.

Big picture

This conversion EIP can be compactly described as:

Starting at block with timestamp greater or equal to CONVERSION_START_TIMESTAMP, on every block migrate CONVERSION_STRIDE conversion units from the read-only MPTs to the active tree. Do this until no more conversion units are remaining.

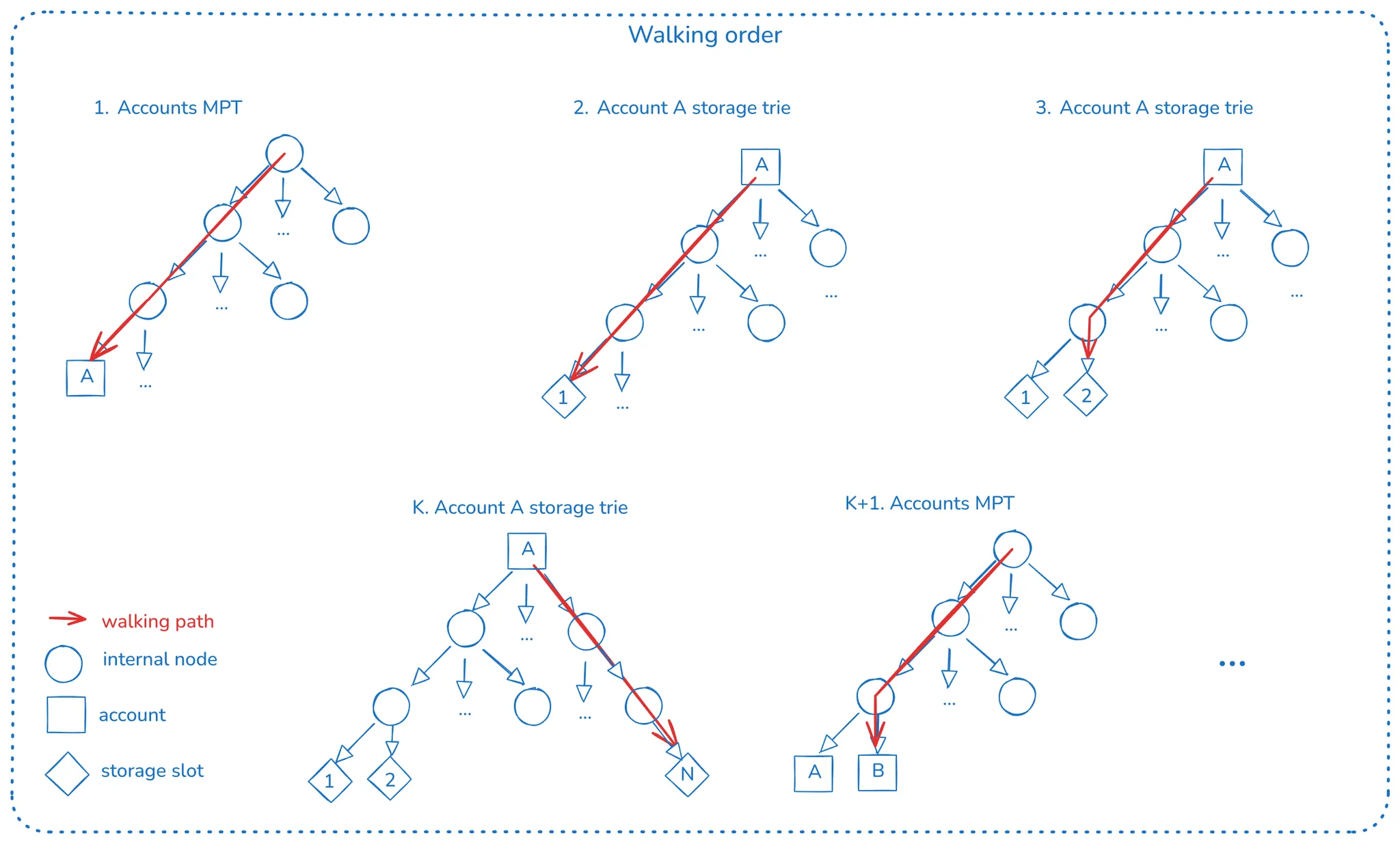

To migrate the data from the MPT(s) to the new tree, a deterministic walk is done in the MPT(s), moving conversion units to the new tree. We’ll explain conversion units soon, but let’s focus only on the walking algorithm.

The walking algorithm is a depth-first walk, meaning that we walk the account’s MPT, and when a leaf is found, we do a depth-first walk into its storage trie (if not empty).

The above image summarizes how the walking is done:

- When we reach account A, we walk into its storage trie, copying all storage slots to the new tree.

- After finishing the storage trie walk, we copy

Account Adata (nonce, balance, and chunkified code). - We continue walking the account’s MPT, now standing on account B.

- We walk the storage trie (if any, since if B is an EOA, this tree is empty) and continue the process.

Since all the MPTs are read-only from the start of the conversion process, the walk is guaranteed to be complete, and thus, the conversion will finish.

This process of walking and copying is done within the block execution pipeline, where only CONVERSION_STRIDE items are copied to the new tree. The walk and copying continue to the next tree, so the whole copying process spans multiple blocks. This is done to put a bound on the overhead of state conversion per block.

CONVERSION_STRIDE value

Choosing the correct value for CONVERSION_STRIDE is important since each step of the walk implies:

- CPU overhead of re-hashing the corresponding key.

- IO overhead since we’re copying data to the new tree.

- CPU overhead is added to the block state root computation since the new tree has extra writes apart from the ones generated by the execution of the transactions.

Previously run proof of concepts done in Geth for Verkle Trees has shown that the CPU overhead of re-hashing the keys is the main bottleneck. But note that Verkle Trees have orders of magnitude more CPU overhead compared to Binary Trees, which are considered hash functions, so it depends on the target tree.

Conversion units

The EIP introduces a concept of conversion units to precisely describe what counts as a moved item for the CONVERSION_STRIDE limit. As currently defined, a conversion unit is:

- Account data: nonce, balance, code hash, and code (if any).

- An account’s storage slot.

Note that one conversion unit implies one or more leaf insertions in the new tree. Both Verkle and Binary propose a unified tree that includes accounts data, storage slots, and accounts chunkified code:

- An account conversion unit implies creating at least two new tree entries:

- BASIC_DATA leaf, containing the version, nonce, balance, and code size.

- CODE_HASH leaf, containing the code hash.

- Depending on the code-chunk size, we’d have to insert N leaves corresponding to each code chunk for the account’s code (if any).

- A storage slot conversion unit implies creating exactly one entry since each storage slot is also a single leaf in the new tree.

The proposed EIP includes in its rationale an important point on why we don’t count each code chunk as an independent conversion unit:

If an account has code, this is chunked and inserted in the VKT in one go. An alternative is including a

CodePhaseand let each inserted chunk consume one unit ofCONVERSION_STRIDE. We decided to not do this to reduce the algorithm complexity. Considering the current maximum code size, the worst case scenario for a block could overflow theCONVERSION_STRIDElimit by 24k/31~=793 units.

At first, this concept might sound over-engineered, but this is because the old tree (MPTs) and the new tree (Verkle/Binary) don’t have the same data in leaf levels. First, the MPTs don’t contain an account code but only the code hash, while the new tree includes code chunks as tree entries. Also, account data such as nonce, balance, and code hash are stored in a single MPT leaf but in more than one in the new tree.

State conversion duration

Considering that the MPTs are read-only, we can determine how many conversion units should be found in the walking. Since we know we copy up to CONVERSION_STRIDE conversion units per block, we can calculate how many blocks the conversion will take.

Note that the migration happens per block and not per slot. The wall clock duration of the conversion process depends on how many missed slots happen in the chain during the conversion process.

Stale data

Note that there are many ways in which a conversion unit we intend to move to the new tree contains stale data and must avoid overriding the value:

- Before EIP-7748 activation, EIP-7612 was activated; thus, the new tree was already getting writes from previous block executions. This means that for a key in the MPT, a more recent value can already exist in the new tree.

- If that didn’t happen before the state conversion started, it can happen during the conversion anyway.

Some examples:

- Conversion unit corresponds to the account’s data: if there was a previous transaction execution that wrote to the account’s balance, this means the MPT balance is stale. Copying the code chunks is still required since transaction execution never triggers code chunkification for existing contracts.

- Conversion unit corresponding to accounts storage slot: a previous transaction could have triggered a write to the storage slot. Thus, the storage slot value in the MPT is stale. Although this value isn’t copied, it still counts since we had to check it.

Pre-EIP-161 accounts

The state conversion is also an opportunity to delete EIP-161 accounts so we can clean them up once and for all instead of passively if they’re touched during block execution.

Preimages

Moving the conversion units from the MPT to the new tree has a catch. Given an entry in the RO MPT, it will live in a different tree key in the new tree since the new tree defines a new way of calculating keys.

This means that the new key has to be computed, so you require the preimage of the MPT key since it’s a hash. This is a big topic that we’ll dive more into next.

Preimage generation and distribution

[Note: this page is heavily based on an existing research post from one of the book authors]

Recommended background

This topic is diving into a subject that can’t be understood correctly in isolation; we recommend previous reading of:

Context

As mentioned in the EIP-7748 Preimages section, we need to calculate new keys when conversion units are moved from the MPTs to the new tree. This calculation requires having the necessary data.

For any of the proposed new trees, we need to calculate the new tree key for:

- An account: we require the account’s address.

- A storage slot: we require the account’s address and storage slot number.

- A code-chunk: we require the contract’s address and its code.

When we do the EIP-7748 walking, clients only have access to the MPT key, which results from hashing addresses or storage slots. Most clients only have these hashed values, so they don’t have enough information to do the listed new tree key calculations. They must have a preimages file to map each MPT key hash to the preimage.

Preimages file

Now we dive into different dimensions of this preimage file:

- Distribution

- Verifiability

- Generation and encoding

- Usage

Distribution

Given that the preimage file is somehow generated, how does this file reach all nodes in the network? The consensus is that it’s probably OK to expect clients to download this file through multiple CDNs. This is compared to relying on alternatives like the Portal network, in-protocol distribution, or including block preimages.

Other discussed options are:

- Having an in-protocol p2p distribution mechanism.

- Distributing the required preimages packed inside each block.

This topic is highly contentious since these options have different tradeoffs regarding complexity, required bandwidth in protocol hotpaths, and compression opportunities. If you’re interested, there’s an older document summarizing many discussions around the topic.

Verifiability

As mentioned above, full nodes will receive this file from somewhere that can be a potentially untrusted party or a hacked supply chain. If the file is corrupt or invalid, the full node will be blocked at some point in the conversion process.

The file is easily verifiable by doing the described tree walk, reading the expected preimage, calculating the keccak hash, and verifying that it matches the client’s expectations. After this file is verified, it can be safely used whenever the conversion starts, with the guarantee that the client can’t be blocked by resolving preimages — having this guarantee is critical for the stability of the network during the conversion period. This verification time must be accounted for in the time delay between EIP-7612 activation and EIP-7748 CONVERSION_START_TIMESTAMP.

Of course, other ways to verify this file are faster but require more assumptions. For example, since anyone generating the file would get the same output, client teams could generate it themselves and hardcode the file’s hash/checksum. When the file is downloaded/imported, the verification can compare the hash of the file with the hardcoded one.

Generation and encoding

Now that we know which information the file must contain and in which order this information will be accessed, we can consider how to encode this data in a file. Ideally, we’d like an encoding that satisfies the following properties:

- Optimize for the expected usage reading pattern: the state conversion is a task running in the background while the main chain runs, so reading the required information should be efficient.

- Optimize for size: as mentioned before, the file has to be distributed somehow. Bandwidth is a precious resource; using less is better.

- Low complexity: this file will only be used once, so a simple encoding format is good. It doesn’t make sense to reinvent the wheel by creating new complex formats unless they offer exceptional benefits while taking longer to spec out and test.

There’s a very simple and obvious candidate encoding that can be described as follows following the example we explored before: [address_A][storage_slot_A][storage_slot_B][address_B][address_C][storage_slot_A].... We directly concatenate the raw preimages next to each other in the expected walking order.

This encoding has the following benefits:

- The encoding format has zero overhead since no prefix bytes are required. Although preimage entries have different sizes (20 bytes for addresses and 32 bytes for storage slots), the EL client can know how many bytes to read next depending on whether they should resolve an address or storage slot in the tree walk.

- The EL client always does a forward-linear read of the file, so there are no random accesses. The upcoming Usage section will expand on this.

If you are interested in knowing how this file can be generated and some analysis about the efficiency of the format, see this research post.

Usage

It is worth mentioning some facts about how EL clients can use this file:

- Since the file is read linearly, persisting a cursor indicating where to continue reading from at the start of the next block is useful.

- Keeping a list of cursor positions for the last X blocks helps handle reorgs. If a reorg occurs, it’s very easy to seek into the file to the corresponding place again.

- Clients can also preload the next X blocks preimages in memory while the client is mostly idle in slots, avoiding extra IO in the block hot path execution.

- If keeping the whole file on disk is too annoying, you can delete old values past the chain finalization point. We doubt this is worth the extra complexity, but it’s an implementation detail up to EL client teams.

Use cases

Stateless Ethereum allows the state of Ethereum to be proven much more efficiently, which has deep implications for the ecosystem.

As explained in this book introduction, the two main motivations are:

- Increasing the gas limit: allowing the Ethereum state to be efficiently proven in SNARKs decouples block verification time from the gas limit.

- Improved decentralization: not requiring disk space to run an EL client allows low-powered devices to join the network and contribute to its security, fulfilling The Verge’s goal of simplifying block validation.

But the above only scratches the surface of the implications of going stateless. This is an active area of research, and we invite everybody to imagine what is possible and share their ideas.

Next, we dive deeper into which use cases going stateless unlocks. More use cases will be added to this chapter in the following months.

Stateless clients

Overview

A stateless client is a client that can trustlessly verify a blockchain block without storing the whole Ethereum state. Trustlessly is the keyword here — compared with light clients, which rely on an external party to provide the required state to verify the block. Stateless clients can also be named secure light clients.

But what are the blockers for stateless clients to be a reality? As explained in the Trees chapter, the Ethereum state is merkelized; thus, we can create Merkle proofs. However, the problem relies on how big these proofs are, which can become a problem for network distribution and security. All the protocol changes described in this book aim to allow efficient enough proofs to enable stateless clients to participate in the network sustainably and in the worst-case scenarios.

Block verification and required state

Let’s better understand the relationship between the Ethereum state and block verification.

To do this, let’s imagine we’re trying to verify a block that contains a single transaction sending 10 ETH from account A to account B. Omitting some details and just using common sense, we need to check that account A has enough balance for the ETH transfer and gas fees. If this isn’t the case, the transaction must fail.

Note that when a block is received with this transfer, an EL client can’t predict which will be account A, so a priori must have this information for all Ethereum accounts. Not doing so means that a potential transaction would make verifying this block impossible for a stateless client. Stateful clients could still verify, but presumably be the minority. More advanced transactions, such as ones that execute contracts, work the same — clients must have accounts’ contract code and storage state since blocks can contain transactions involving any existing contract.

The main insight is that given a block with transactions, the amount of state that we need to verify the block is orders of magnitude smaller than the complete state. Even further, there’s Ethereum state that hasn’t been used for years but is still required since at any point in time a new transaction touching this state could appear. This tangentially surfaces the need for supporting State Expiry in the protocol, which will be a future chapter in this book.

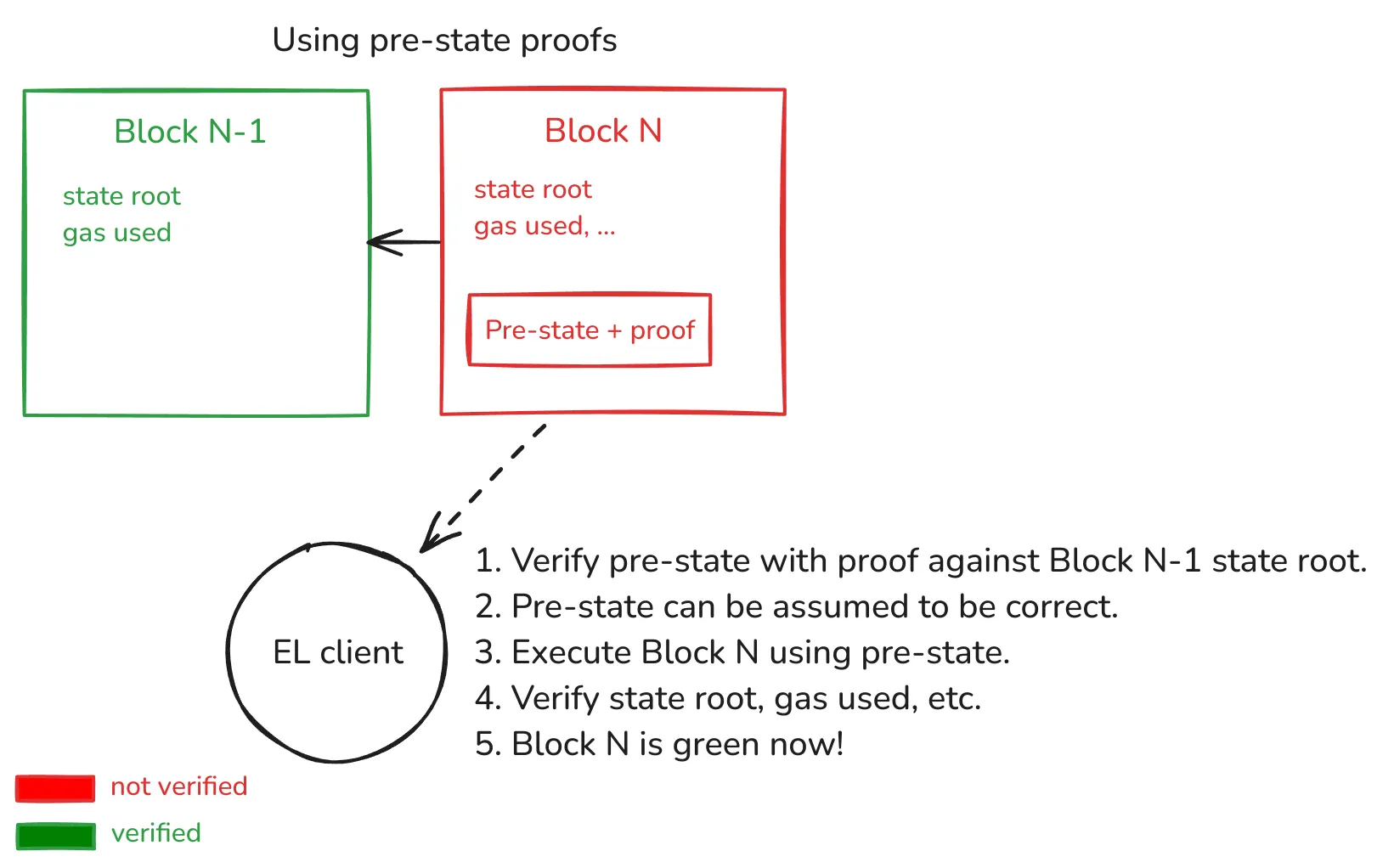

How would they work?

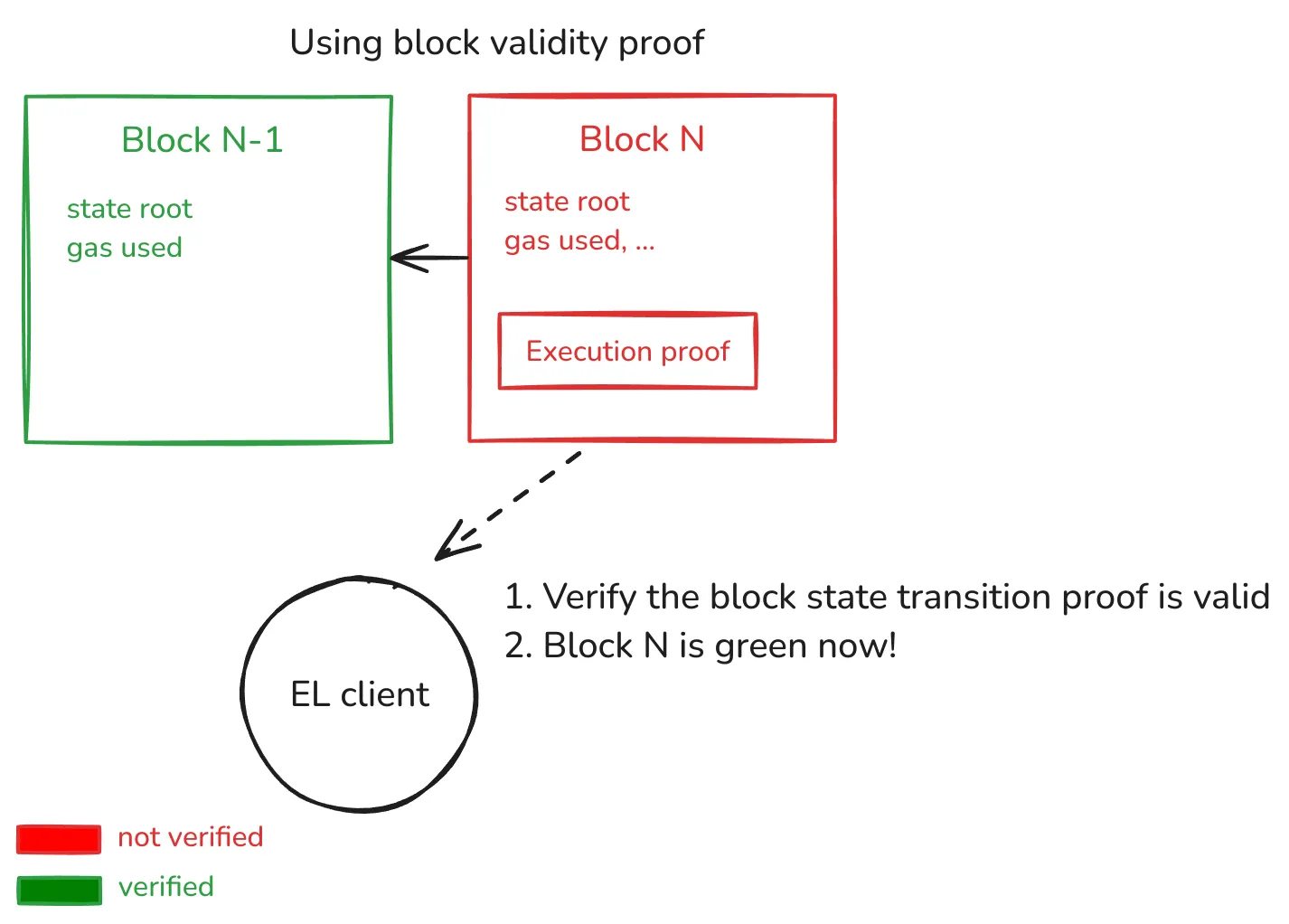

The following diagram depicts how a stateless client relying on pre-state proofs would work:

In a fully SNARKified L1 world:

In this setup, the proof is fully proving that the state transition with public inputs:

Block N-1state root (i.e., pre-state).Block Nhash.Block Nstate root (i.e., expected state after executing the tx list on top of pre-state).

This allows us to push further L1 scaling since the EL Client doesn’t have to do any EVM code execution, and the only computational cost is verifying the proof.

There is active research and proposals that might change how execution payloads are validated and executed (e.g., delayed validation). On this page, we stick to how the current protocol works but stay tuned since the book will continue to be updated if these changes are included.

Stateless clients as validators

The above explanations focused on stateless clients verifying the validity of the blockchain without trusting external parties, which is the ethos of Ethereum. However, stateless clients can go further and participate as validators in the network.

Not having the full state means they can’t be block builders since generating the proof indirectly requires having access to the state. This doesn’t mean stateless clients can’t be validators since many validators today delegate the task of block builders to external parties (e.g., using MEV-boost). They could still receive the candidate block with the corresponding proof, verify it is correct, and then propose it to the network.

The implications of stateless validators are an active area of research since they have deep implications for network topology and security. Soon, new chapters will be added to the book to explore this topic further.

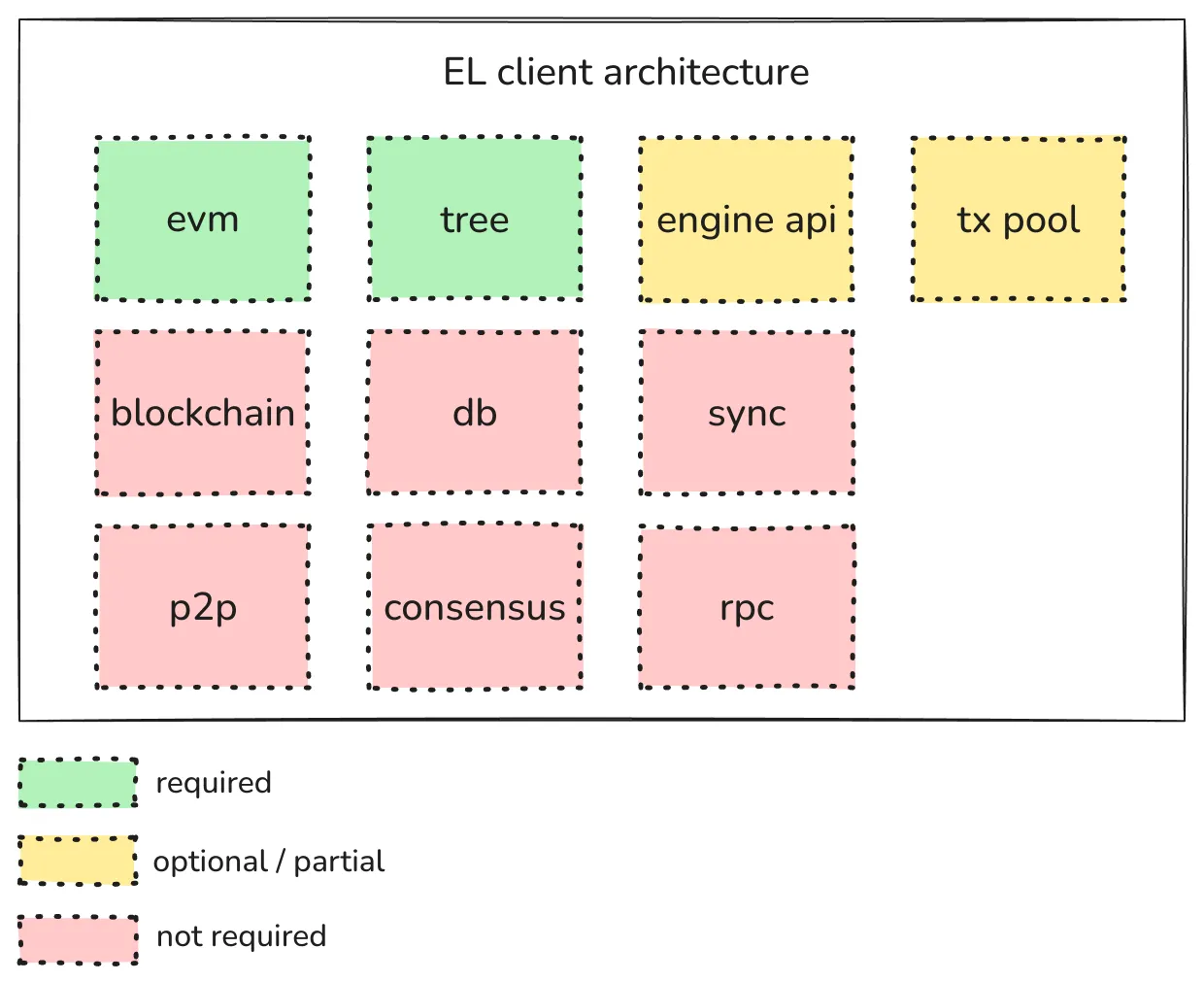

Stateless client architecture

One benefit of having stateless clients is that they’re simpler to implement compared with a full node.

Let’s look at the following diagram:

- An EVM implementation is required since block execution is needed.

- A Tree implementation is required since the new state root must be calculated.

- The Engine API and Txn pool should be implemented partially or optionally.

- A full node involves the rest of the components but isn’t strictly required by a stateless client.

Resources

Over the past years, many articles and talks have been made about stateless, mainly on the topic of Verkle Trees. With recent updates to the roadmap, we will continue expanding this section, increasing our coverage of Binary Trees as well.

Introductory material

- Why stateless? - Dankrad Feist’s introduction on his blog

- Possible futures of the Ethereum protocol, part 4: The Verge - Vitalik’s latest update on the stateless roadmap

- Verkle Trees - Vitalik’s intro on Verkle Trees

Video presentations

- Anatomy of a stateless client - Guillaume Ballet, April 2024: an overview of how stateless improves client maintainability

- Recipes for a Stateless Ethereum - Guillaume Ballet, March 2024: a good summary of the use cases of stateless Ethereum

- Verkle Trees 101 - Guillaume Ballet, Ignacio Hagopian, Josh Rudolf, April 2024 - an overview of the EIPs used in Verkle Trees (a lot of it still current for Binary Tress)

- Verkle sync: bring a node up in minutes - Guillaume Ballet, Tanishq Jasoria, September 2023 - a presentation of how stateless clients improve sync algorithms

- The Verge: Converting the Ethereum State to Verkle Trees - Guillaume Ballet, July 2023 - a presentation of the conversion process

- Ava Labs Systems Seminar: Verkle trees for statelessness - Guillaume Ballet, October 2023 - a comprehensive overview of the states of stateless development as of October 2023

- DevCon: How Verkle Trees Make Ethereum Lean and Mean - Guillaume Ballet, Oct 2022

- Verkle Tries for Ethereum State - Dankrad Feist, Sept 2021 - PEEPanEIP presentation

Advanced material

Overviews

- Overview of tree structure - a blog post describing the structure of a Verkle Tree, which is still current with the Binary Tree proposal.

- Overview of cryptography used in Verkle

- Anatomy of a Verkle proof - same as above

- Stateless and Verkle Trees (video) - a dated presentation giving an overview of the stateless effort and Verkle Trees in particular

State expiry

- https://ethresear.ch/t/state-expiry-in-protocol-vs-out-of-protocol/23258

- https://ethresear.ch/t/the-future-of-state-part-1-oopsie-a-new-type-of-snap-sync-based-wallet-lightclient/23395/1

- https://ethresear.ch/t/the-future-of-state-part-2-beyond-the-myth-of-partial-statefulness-the-reality-of-zkevms/23396/1

- https://ethresear.ch/t/compression-based-state-expiry/23443

Analysis

- https://ethresear.ch/t/ethereum-bytecode-and-code-chunk-analysis/22847

- https://ethereum-magicians.org/t/not-all-state-is-equal/25508/3

- https://ethresear.ch/t/data-driven-analysis-on-eip-7907/23850

- https://ethresear.ch/t/a-small-step-towards-data-driven-protocol-decisions-unified-slowblock-metrics-across-clients/23907

State gas costs

- https://ethereum-magicians.org/t/the-case-for-eip-8032-in-glamsterdam-tree-depth-based-storage-gas-pricing/25619

- https://ethereum-magicians.org/t/eip-8058-contract-bytecode-deduplication-discount/25933

Misc

- https://ethresear.ch/t/a-short-note-on-post-quantum-verkle-explorations/22001

Advanced technical write-ups

- Inner Product Argument - Dankrad Feist

- PCS Multiproof - Dankrad Feist

- Deep dive into Circle-STARKs FFT - Ignacio Hagopian

EIPs

Included

Championned

- EIP-4762, gas costs changes for Verkle Trees

- EIP-7709, bypass the EVM to read block hashes in the state

- EIP-7612 and EIP-7748, about the state tree conversion

- EIP-7864, about the proposed scheme for Binary Trees

- EIP-8037, State Creation Gas Cost Increase

- EIP-8038, State-access gas cost update

- EIP-8125, Temporary Contract Storage

Proposed, but not actively championned

- EIP-2584, Trie format transition with overlay trees

- EIP-2926, MPT-based code chunking

- EIP-3102, binary trees

- EIP-6800, structure of a Verkle Tree

- EIP-7736 (verkle) leaf-based state expiry

- EIP-7545, proof verification precompile

- EIP-8032, Size-Based Storage Gas Pricing

- EIP-8058, Contract Bytecode Deduplication Discount

Measurements & Metrics

Development

The future of Ethereum stateless is currently in active development, including research, protocol development, and UX impact.

Stateless community

A diverse set of people and teams are working on all these fronts, including:

- Teams from the Ethereum Foundation led by Stateless Consensus and supported by teams such as the Applied Research Group, Robust Incentives Group, STEEL, Devops, and Cryptography.

- Multiple core developers from EL clients such as Nethermind, Geth, EthereumJS, and Besu.

- Many independent contributors from the Ethereum ecosystem.

Ethereum Fellowship Program (EFP)

Stateless Ethereum has also been part of multiple past EFP projects:

- Cohort three (late-2022):

- Cohort four (mid-2023):

- Cohort five (mid-2024):

Grants

Moreover, the following are ongoing grants actively working on many fronts:

- Stateless expiry (EIP-7736) PoC using Verkle Trees

- Zig EVM implementation to be used in a future stateless Zig client.

If you’re interested in working on any specific stateless topic and need a grant, please send your proposal to stateless@ethereum.org for consideration!

Mainnet analysis

This section contains multiple analysis done on how stateless protocol changes would behave on mainnet. This helps to understand how different properties such as proof sizes, UX impact, and others would behave on mainnet state access patterns.

Verkle replay

Context

This data is gathered replaying ~200k historical blocks around the Shanghai fork. While they do not give a perfect view of how execution will behave once EIP-4762 is active, it still gives a good indication of what to expect, and how the spec should evolve.

Witnesses

This section explores multiple dimensions of the execution witness design.

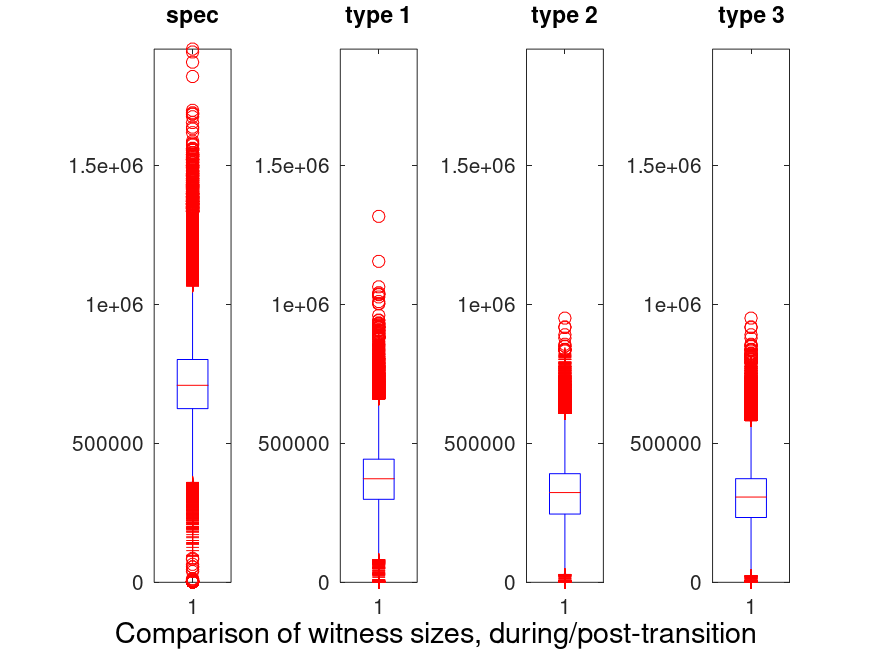

Size

Research has been conducted, in order to determine the best structure for a witness. We tried four methods:

- The first one, as specified in the current version of the consensus spec, referred to as type 0. This approach uses the SSZ type

Optional[T], that is yet to be officially included in the spec. - A first variation of the spec, in which prestate values are grouped together in their own list, and poststate values are grouped in their own list as well. This is referred to as type 1.

class SuffixStateDiffs(Container):

suffixes: bytes

# None means not currently present

current_values: List[bytes]

# None means value not updated

new_values: List[bytes]

- A second variation of the spec, in which updates, insertions and reads are grouped in their own separate lists. The suffixes for each of these lists are also grouped as their own byte lists. This is referred to as type 2.

class SuffixStateDiffs(Container):

updated_suffixes: bytes

updated_currents: List[Bytes32]

updated_news: List[Bytes32]

inserted_suffixes: bytes

inserted_news: List[Bytes32]

read_suffixes: bytes

read_currents: List[Bytes32]

missing_suffixes: bytes

- A final variation, where update, reads, inserts and proof-of-absences are grouped, along with their suffixes, into their own lists. This is referred to as type 3.

class UpdateDiff(Container):

suffix: byte

current: Bytes32

new: Bytes32

class ReadDiff(Container):

suffix: byte

current: Bytes32

class InsertDiff(Container):

suffix: byte

new: Bytes32

class MissingDiff(Container):

suffix: byte

class StemStateDiff(Container):

stem: Bytes31

updates: List[UpdateDiff]

reads: List[ReadStateDiff]

insert: List[InsertDiff]

missing: List[MissingStateDiff]

Here are our findings after replaying data:

One can see that the spec is much less efficient than the other types. This is due to the inefficiency of storing Optional[T]. As a result, we didn’t include this method in the rest of the analysis.

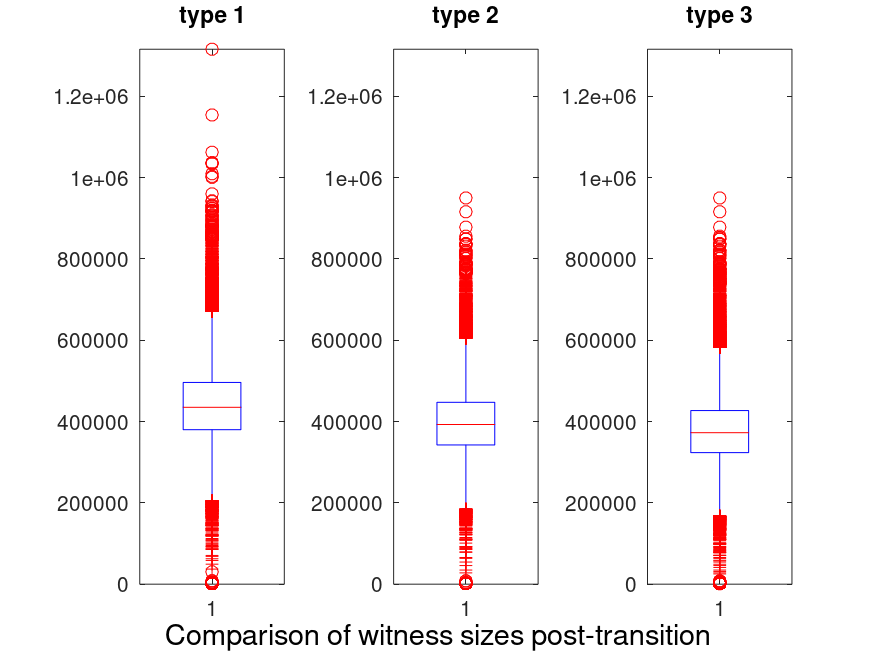

When only considering witnesses that were produced after the whole state was converted to verkle, this is what is found:

The maximum and median observed sizes for each type, are summarized in the following table:

| Name | Median (KB) | Max (KB) |

|---|---|---|

| type 1 | 425 | 1285 |

| type 2 | 383 | 928 |

| type3 | 380 | 928 |

While type 2 and type 3 are quite close, one can see that type 3 has better average and maximum sizes.

Witness size breakdown (type 3)

Replaying past blocks, we generated the witnesses and could provide the following size breakdown for type 3 witnesses. This is averaged over ~134k blocks, as the conversion blocks were skipped.

| Component | avg size % | min size % | max size % |

|---|---|---|---|

| keys | 13 | 9 | 49 |

| pre-values | 78 | 7 | 85 |

| post-values | 0.1 | 0 | 47 |

| verkle proof | 12 | 8 | 77 |

Takeaways

The key takeaways are:

- Type 3 witnesses offer a great compression.

- Although post-state values don’t take much size on average, they can take up almost half of it in the worst case. It would make sense to remove them, as validating them requires block execution.

Database